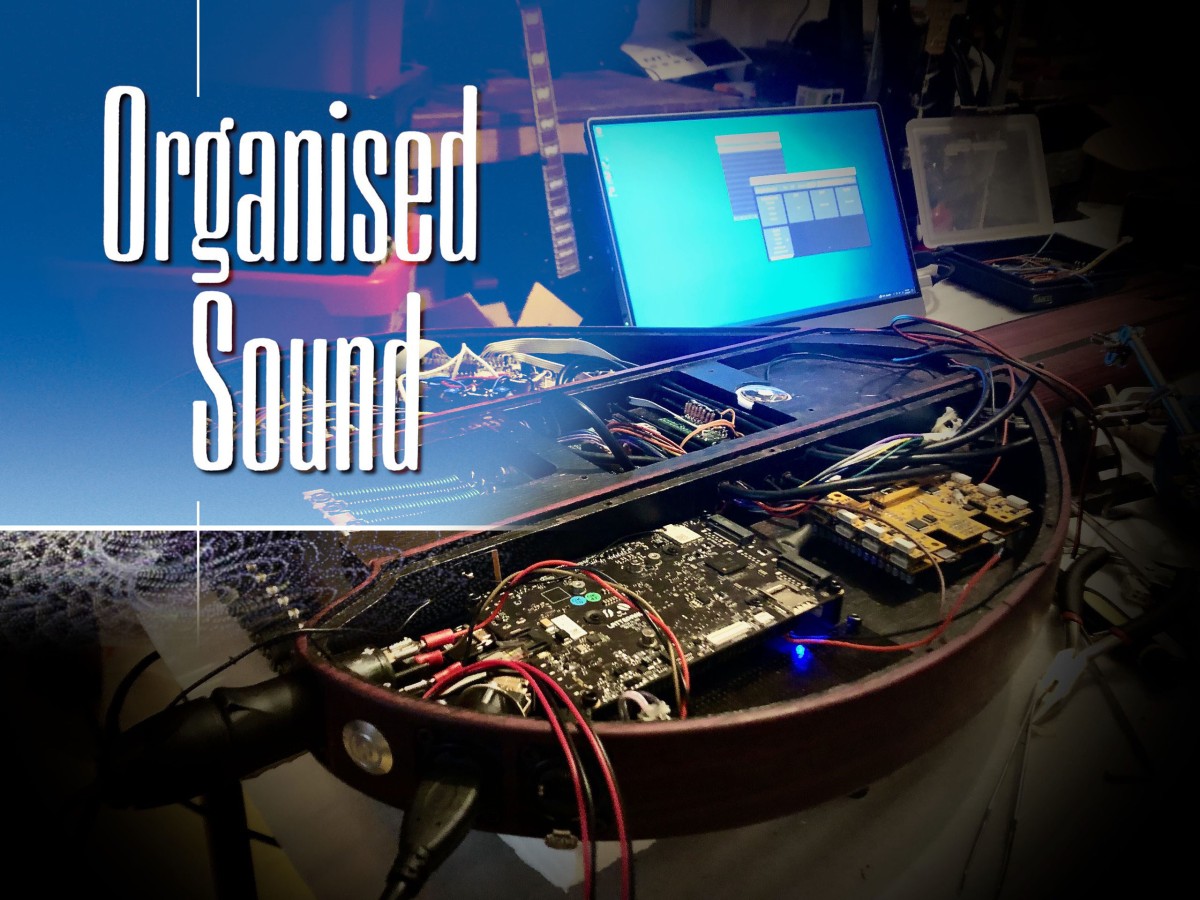

Organised Sound – Call for papers: Embedding Algorithms in Music and Sound Art

Excited to announce that I am co-editing, with Thor Magnusson, a thematic issue of Organised Sound on the topic of Embedding Algorithms in Music and Sound Art. Please find the full call below and on the journal’s website. The idea for an edited journal issue came after the Embedding Algorithms Workshop that took place at the Berlin Open Lab…