EDIT: following the workshop, we’re now co-editing a thematic issue of Organised Sound on the topic. Here is the call.

The Embedding Algorithms Workshop is an informal meeting of researchers and practitioners working with embedded systems in the fields of musical instrument design, wearable computing, interaction design, and performing arts. During the workshop, the participants will show and discuss:

- designing physical objects (instruments, wearables, and more) that incorporate some algorithmic process that is central to their function or behaviour;

- designing and implementing embedded algorithms;

- using/performing/practising with objects with embedded algorithms;

- and more.

Time: Friday, 26th July 2024, 10:00-18:00

Place: Universität der Künste Berlin – Berlin Open Lab – Mixed Reality Space (aka BOL2) – Einsteinufer 43, 10587 Berlin.

NB: There is a large construction site in front of Einsteinufer 43. Once you have found your way around it and entered the building, go past the reception and through the glass doors. Turn left at the end of the corridor and go past another set of glass doors. BOL2 is at the very end of the long corridor ahead of you, the last door on the right.

The event is open and free to attend. Please register here just so we can get a better idea of how many people to expect.

More info: mail(at)federicovisi(dot)com

Overall schedule:

10:00 – 12:30: presentations/discussions (session 1)

12:30 – 13:30: introduction to the exhibitions and lunch break

13:30 – 16:00: presentations/discussions (session 2)

16:00 – 16:30: coffee break, exhibitions

16:30 – 18:00: performances and closing remarks

Presenters

(15 min + 10 min Q&A each, distributed in 2 x 2.5-hr sessions)

In no particular order:

- Andrew McPherson

- Teresa Pelinski & Adam Pultz Melbye

- Alberto de Campo & Bruno Gola

- Federico Visi

- Echo Ho

- Eliad Wagner

- Viola Yip

- Nicola Hein

- John MacCallum

- Zora Kutz & Stratos Bichakis

- Friederike Fröbel & Malte Bergmann

Talks

In no particular order:

Alberto de Campo & Bruno Gola: “From intuitive playing to Absolute Relativity – How tiny twists open possibilities for Multi-Agent Collaborative performance”

This talk discusses the sequence of small twists in the long-term development of the NTMI project that opened up new perspectives. These turning points include the idea of replacing analytical control with intuitive influence, opening up the interface options from a single custom device to various common interfaces, and enabling these influence sources to work simultaneously.

The latest step, adapting the influence mechanisms to be consistently relative, creates further options for multi-agent performance, including networks of influence sources and destinations, which may extend to nonhuman actors.
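
The shift from absolute to relative influence can be illustrated with a small sketch. Assuming parameters normalised to the range 0 to 1, each source sends only changes (deltas) rather than absolute values, so several sources can act on the same parameters at once without one overriding another. The class, parameter, and source names below are hypothetical, and this is not the NTMI implementation.

```python
class RelativeParams:
    """Shared parameters that sources can only nudge, never set outright.

    Hypothetical illustration of relative influence; not the NTMI code.
    """

    def __init__(self, names, initial=0.5):
        # Every parameter starts at the same normalised value (range 0..1).
        self.values = {name: initial for name in names}

    def influence(self, source, deltas, weight=1.0):
        """Apply relative changes from one source, scaled by `weight`.

        `source` is only a label in this sketch; a fuller system might keep
        per-source weights or mappings.
        """
        for name, delta in deltas.items():
            new_value = self.values[name] + weight * delta
            # Clip so that accumulated nudges stay inside the valid range.
            self.values[name] = min(1.0, max(0.0, new_value))
        return dict(self.values)


params = RelativeParams(["density", "pitch", "brightness"])
# Two sources act on the same parameters; neither overrides the other.
params.influence("gamepad", {"density": 0.25, "pitch": -0.125})
params.influence("network_peer", {"density": 0.125, "brightness": 0.5})
print(params.values)  # {'density': 0.875, 'pitch': 0.375, 'brightness': 1.0}
```

Because no source owns an absolute value in this sketch, adding another source (a second controller, a networked peer, or a nonhuman agent) only contributes further deltas, with no conflicting absolute positions to resolve.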

Eliad Wagner: “Modular synthesisers as cybernetic objects in musical performance”

This presentation (a performance demonstration accompanied by discussion) is the result of ongoing artistic research on the topic. Its focus is twofold: the material algorithms that govern the cybernetic behaviour of the machine (sound creation), and the human gestures (including the points of control that allow them) that facilitate form and meaning.

Central to the examination is the embedding of patch algorithms within the modular synthesiser, an instrument that lacks established techniques, canon, or prescribed usage. Each interaction with the synthesiser reinvents its structure and interface, resulting in a constant process of instrumental grammatization. In this context, improvisation emerges as a useful and even necessary method for responding to the uncertainty and unpredictability of the machine. In a sense, the embedded algorithm informs the behaviour of both counterparts, machine and human, and potentially transforms the identity of the human component from control to participation.

Echo Ho: “Can Ancient Qin Fingering Methods Inspire AI Engineering for New Musical Expressions?”

This short presentation explores the convergence of ancient Chinese qin fingering methods and modern AI/ML techniques. The PhD project “qintroNix” reinterprets historical qin learning methods for contemporary art and music. The qin is a seven-stringed instrument with a history spanning three millennia, and its tradition uses classifiers inspired by natural phenomena to depict fingering techniques. These classifiers, representing animals and plants, serve as mnemonic devices and metaphorical maps, linking physical movements with musical expression and philosophical models.

The project speculates on integrating these ancient techniques into modern AI/ML frameworks. Just as the ancient classifiers distilled natural phenomena into musical gestures, AI/ML algorithms extract and model features from large datasets. This parallel provides a framework for developing AI/ML systems with human-interpretable metaphors, making pattern recognition and classification more transparent.

Exploring phenological phenomena in qin music fosters an ecological consciousness, connecting musicians with an other-than-human world. This entangled approach aligns with contemporary efforts to bridge organic and digital realms; combining archaic knowledge with contemporary technology can unlock new possibilities for imagination, innovation, and a more profound understanding of music’s role in our world.

Viola Yip: “Liminal Lines”

For her latest solo work, “Liminal Lines”, Viola Yip developed a performance with her self-built electromagnetic feedback dress. The dress is made of non-insulated audio cables attached to a soft PVC fabric, and these cables carry audio signals. An electromagnetic microphone picks up the signals, which then pass through guitar pedals and a mixer before being fed back into the dress, completing the feedback loop. When she wears the dress, her physical engagement with it (touching, squeezing, stretching, etc.) produces a wide range of distances, pressures, and speeds. These interactions between body and instrument physically manipulate the interference and modulation of the electromagnetic fields, allowing various complex sonorities to emerge and change over time.

For this workshop, she has developed a new lecture performance with the dress, in which she will delve into her journey with physical touch, materialities, and the spaces within and surrounding the wearable instrument and the performer’s body.
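
The signal chain described above can be approximated, very loosely, as a discrete-time feedback loop. In this toy model, a single hypothetical `coupling` factor stands in for everything the body changes (distance, pressure, speed), and the pedals are assumed to apply gain plus soft clipping (`tanh`). Both are simplifying assumptions: the real piece is an analog, electromagnetic system, not a digital one.

```python
import math


def feedback_loop(coupling, pedal_gain=2.0, steps=50, seed=1e-3):
    """Iterate a toy loop: dress -> pickup -> pedals -> mixer -> dress.

    `coupling` (hypothetical) stands in for how strongly the pickup hears the
    dress, which in the real piece varies with distance, pressure and speed.
    Returns the signal level after each pass around the loop.
    """
    signal = seed  # a tiny initial disturbance
    history = []
    for _ in range(steps):
        picked_up = coupling * signal  # electromagnetic pickup
        # Assumed pedal behaviour: gain followed by soft clipping;
        # the result is the mixer output fed back into the dress.
        signal = math.tanh(pedal_gain * picked_up)
        history.append(signal)
    return history


quiet = feedback_loop(coupling=0.3)  # loop gain 0.6: the disturbance decays
loud = feedback_loop(coupling=1.0)   # loop gain 2.0: grows, then saturates
print(quiet[-1], loud[-1])
```

When `coupling * pedal_gain` stays below 1, a small disturbance dies away; above 1, it grows until the soft clipping holds it at a stable level. In this simplified picture, changing the coupling through movement moves the loop between silence and sustained sound.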

Andrew McPherson: “Of algorithms and apparatuses: entangled design of digital musical instruments”

Digital musical instruments are often promoted for their ability to reconfigure the relationships between actions and sounds, or to let those relationships incorporate forms of algorithmic behaviour. In this talk, I argue that these apparently unlimited possibilities actually obscure strong and deeply ingrained ideologies, which lead to certain design patterns appearing repeatedly over the years. Such ideological decisions include the reliance on spatial metaphors and unidirectional signal-flow models, and the supposition that analytical representations of music can be inverted into levers of control for creating it. I will present work in progress that theorises an alternative view based on Karen Barad’s agential realism, particularly Barad’s notions of the apparatus and the agential cut. In this telling, algorithmic instruments are not merely measuring and manipulating stable, pre-existing phenomena; they are actively bringing those phenomena into existence. On closer inspection, the boundaries between designer and artefact, instrument and player, and materiality and discourse are more fluid than they first appear, which opens the way for new approaches to the design of algorithmic tools within and beyond music.

Nicola L. Hein: “Cybernetic listening and embodiment in human-machine improvisation”

In this talk, I will show and discuss several of my pieces that operate within the domain of human-machine improvisation using musical agent systems. The focus of this talk will be the changing parameters of cybernetic listening, interaction, and embodiment between human and machine performers. Using my own works, which employ purely software-based musical agents, visual projections and light, robots, and varying perceptual modalities of musical agents as a matrix of changing parameters of the situated human-machine interaction, I will argue for the importance of embodiment as a central concern in musical human-machine improvisation. The term cybernetic listening will help me elaborate further on the systemic components of musical performance and interaction with musical agent systems.

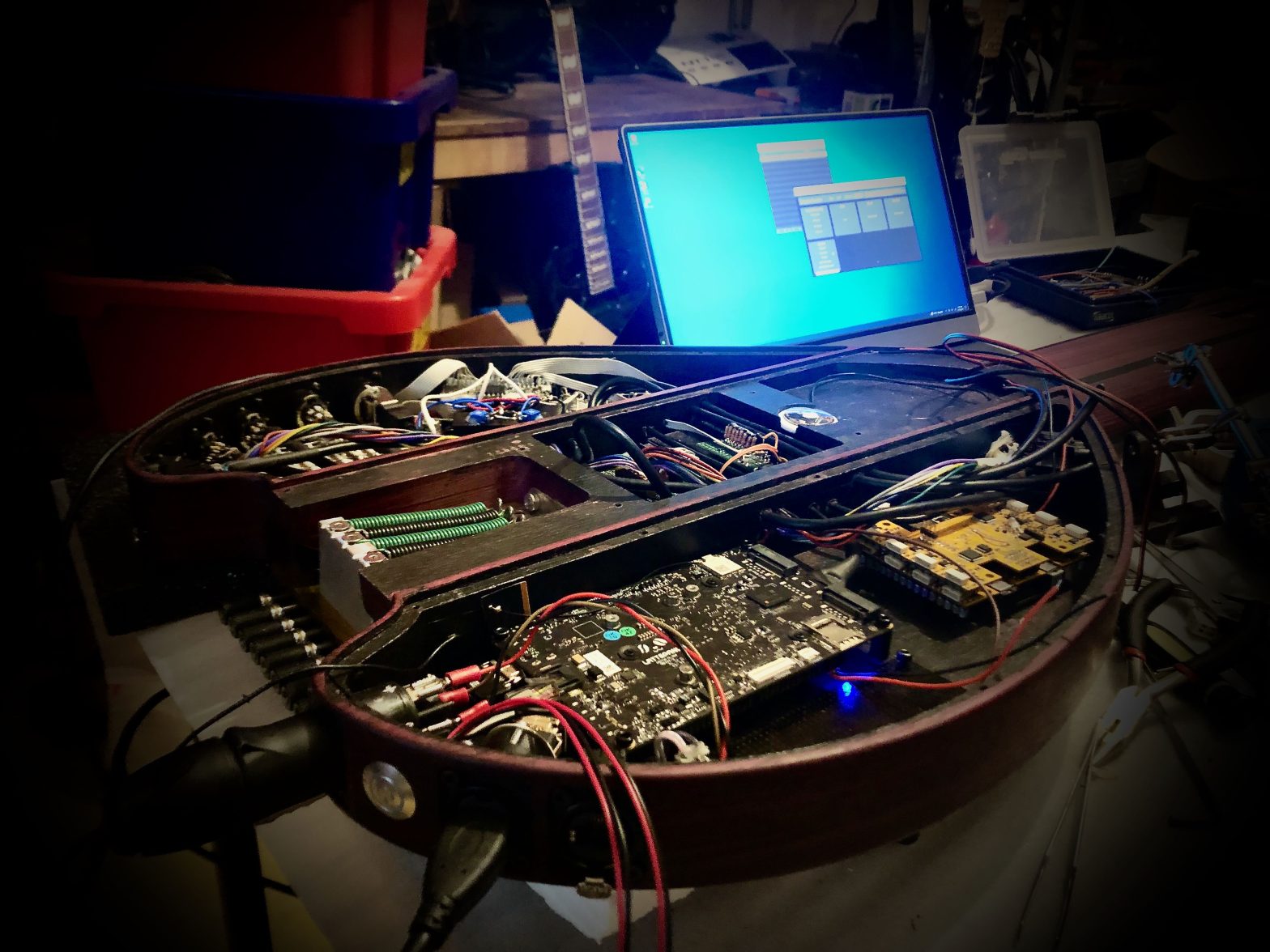

Federico Visi: “The Sophtar: a networkable feedback string instrument with embedded machine learning”

The Sophtar is a tabletop string instrument with an embedded system for digital signal processing, networking, and machine learning. It features a pressure-sensitive fretted neck, two sound boxes, and controlled feedback capabilities provided by bespoke interface elements. The design of the instrument is informed by my practice with hyperorgan interaction in networked music performance. I discuss the motivations behind the development of the instrument and describe its structure, its interface elements, and the hyperorgan and sound synthesis interaction approaches it implements. Finally, I reflect on the affordances of the Sophtar and its differences from and similarities to other instruments, and outline future developments and uses.

Teresa Pelinski and Adam Pultz Melbye: “Building and performing with an ensemble of models”

How can we treat machine learning models as design material when building new musical instruments? Many of the tools available for embedding machine learning in musical practice have fixed model architectures, so practitioners’ involvement with the models is often limited to the training data. In this context, the design material appears to be the data. From a developer’s perspective, however, when training a model for a specific task, the word ‘model’ refers not to a single entity but to an ensemble: the architecture is trained while varying the number of layers, blocks, or embedding sizes (the hyperparameters), as well as training-specific parameters such as learning rate or dropout.

For the last month, we have been working on a practice-based research project involving the FAAB (feedback-actuated augmented bass), the ai-mami system (AI-models as materials interface), and a modular synthesiser. In this talk, we will discuss our process of building this performance ensemble, our insights into using machine learning models as design material, and, finally, the technical infrastructure of the project.
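
The idea that a ‘model’ is really an ensemble can be made concrete by enumerating a hyperparameter grid: every combination of architecture and training settings yields one variant to be trained. The grid names and values below are illustrative assumptions, not the settings used in the ai-mami system.

```python
from itertools import product

# Illustrative grid (assumed values): architecture hyperparameters first,
# then training-specific parameters.
grid = {
    "num_layers": [2, 4],
    "embedding_size": [32, 64],
    "learning_rate": [1e-3, 1e-4],
    "dropout": [0.0, 0.2],
}


def ensemble_configs(grid):
    """Yield one configuration dict per combination of grid values."""
    keys = list(grid)
    for values in product(*(grid[key] for key in keys)):
        yield dict(zip(keys, values))


# Each configuration would be trained separately, giving one member of the ensemble.
configs = list(ensemble_configs(grid))
print(len(configs))  # 16
print(configs[0])
```

Even this small grid produces 16 distinct variants, which is one way to see why the ensemble, rather than a single fixed model, can become the material a designer works with.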

John MacCallum: “OpenSoundControl Everywhere”

In this talk/demo I will preview OSE, a lightweight, dynamic OpenSoundControl (OSC) server designed for rapid prototyping in heterogeneous environments. Since its development over 25 years ago, OSC has been a popular choice for those looking to expose software and hardware to dynamic control. OSC itself, however, is not dynamic: integrating new nodes seamlessly into an existing network is challenging, and OSC servers are not typically extensible at runtime. This lack of dynamism increasingly stands in the way of truly rapid prototyping in fields such as digital musical instrument design. OSE addresses this challenge by implementing a general-purpose OSC server that can itself be configured through OSC, allowing for dynamic control and configuration. I will briefly discuss some implications for design with such a system, the current state of the work, and its future.
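
To illustrate what an OSC server that can itself be configured through OSC might look like, here is a minimal sketch of a dispatcher whose routing table is edited by messages sent to it. Messages are modelled as plain (address, arguments) pairs, with no OSC encoding, networking, or address-pattern matching; the `/server/store` and `/server/alias` addresses are invented for this example and are not OSE's API.

```python
from typing import Any, Callable, Dict, List

Handler = Callable[[List[Any]], Any]


class DynamicDispatcher:
    """Toy OSC-style router whose own configuration is reachable by address.

    Hypothetical sketch only; not OSE's implementation or API.
    """

    def __init__(self) -> None:
        self.routes: Dict[str, Handler] = {}
        self.state: Dict[str, Any] = {}
        # Configuration is exposed as ordinary addresses (invented names).
        self.routes["/server/store"] = self._store
        self.routes["/server/alias"] = self._alias

    def _store(self, args: List[Any]) -> None:
        # /server/store <address>: add an address that remembers its last arguments.
        address = args[0]

        def handler(values: List[Any], address: str = address) -> List[Any]:
            self.state[address] = values
            return values

        self.routes[address] = handler

    def _alias(self, args: List[Any]) -> None:
        # /server/alias <new> <existing>: route a new address to an existing handler.
        new, existing = args
        self.routes[new] = self.routes[existing]

    def dispatch(self, address: str, *args: Any) -> Any:
        handler = self.routes.get(address)
        if handler is None:
            raise KeyError(f"no handler for {address}")
        return handler(list(args))


server = DynamicDispatcher()
server.dispatch("/server/store", "/synth/freq")  # extend the server at runtime
server.dispatch("/synth/freq", 440.0)
server.dispatch("/server/alias", "/f", "/synth/freq")
server.dispatch("/f", 220.0)
print(server.state)  # {'/synth/freq': [220.0]}
```

Because the configuration addresses are ordinary routes in this sketch, any client able to send messages could, in principle, extend the server while it is running.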

Exhibitions

(the exhibitions will be briefly introduced right before the lunch break)

- Zora Kutz and Stratos Bichakis: Beads Beats

- Friederike Fröbel, Malte Bergmann: Student projects, MA Interface Cultures, Kunstuniversität Linz

Zora Kutz & Stratos Bichakis: “Beads Beats”

As one of the earliest forms of fabric decoration, found separately in cultures across the world, beading offers a rich history of techniques and materials that lend themselves well to interactive textiles. Capacitive sensing, which responds to touch and pressure, allows for a range of inputs that can then be used for a variety of applications. In this project, we aim to create an artefact not unlike a musical instrument, used to control both synthetic soundscapes and the spatialisation of audio material. Along the way, we are documenting our findings on both the types of interaction we are researching and the materials and construction specifications.

Friederike Fröbel & Malte Bergmann: “Experiments with textile interfaces and found sounds”

This exhibition presents projects that explore meaningful sound interactions through textile-based tangible interfaces using digital sound processing techniques. They were developed in two courses of the Master’s programme Interface Cultures at the University of Art and Industrial Design in Linz, Austria. The annual course explores the relationship between technology, fashion, craft, and design, with a particular focus on the idea of dynamic surfaces and soft circuits and how these can be realised through textile processing techniques such as knitting, weaving, embroidery, and many more. The course consisted of experimenting with and exploring textile processing techniques using capacitive sensors, and designing novel interaction prototypes with soft interfaces. Students worked on projects using Bela Mini, Trill Craft, and Pure Data. Each student brought a personal field recording and a piece of clothing or textile to which they had a particular attachment. The materials ranged from fish leather and stomach rumbles to suitcases and the rattling sounds of railway bridges. These materials were ‘upcycled’ and combined in group work, using soft textile sensing to create expressive and playful ways of using clothing as a sound interface.

Performances

(~1 hour in total at the end of the workshop)

- Echo Ho and Alberto de Campo

- Adam Pultz Melbye and Teresa Pelinski

- Eliad Wagner

- Federico Visi

- Absolute Relativity: flatcat, Kraken, nUFO, and more; group performance, audience welcome to play

- Nicola Hein